Provider Management

Provider List

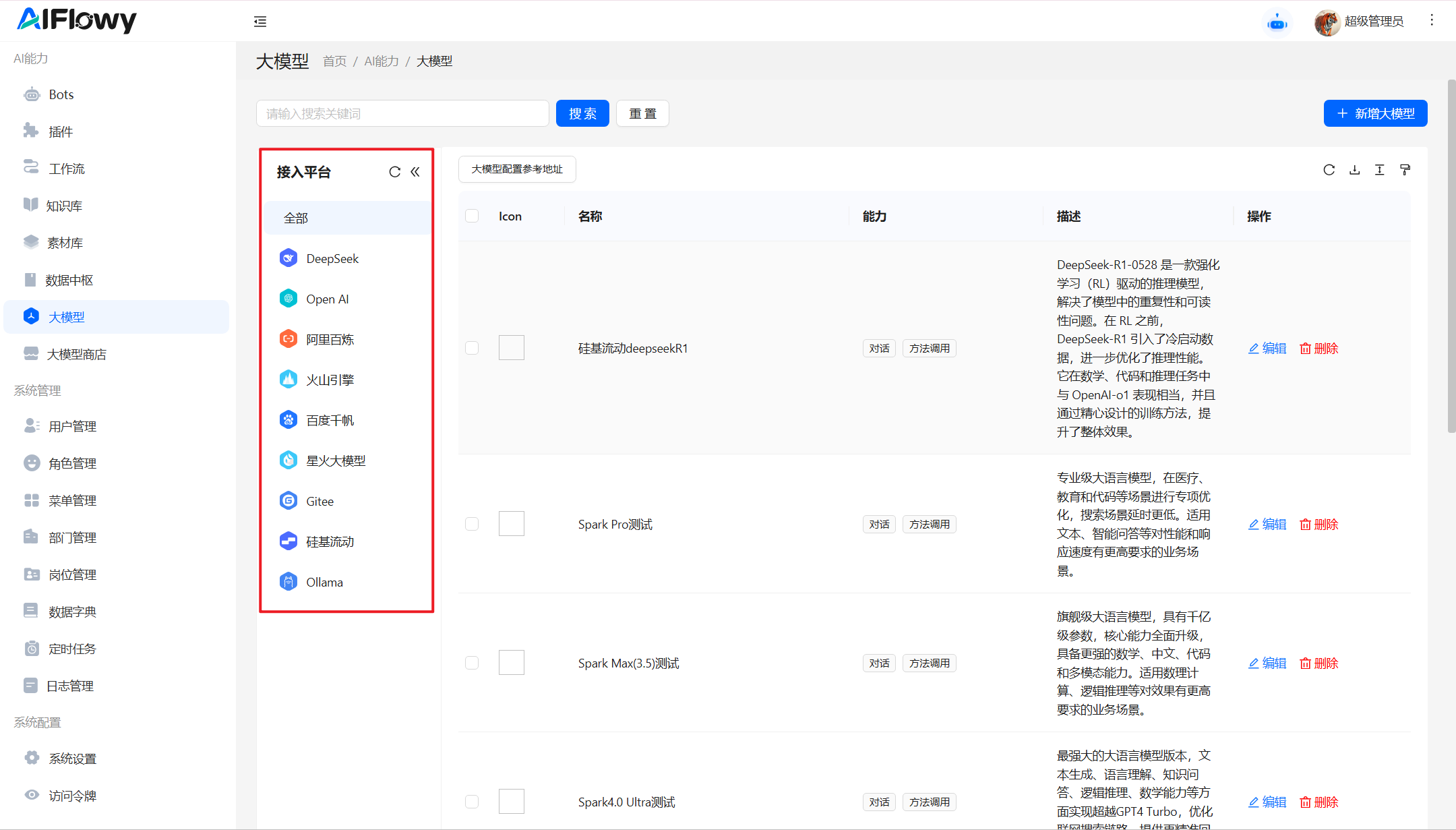

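The left side of the LLM management page shows the list of providers the system supports. Click a provider, and the list on the right shows which of that platform's models are already configured in the system.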
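
The provider list shown here is configured in `ai-brands.json` (described in the next section). The hypothetical Python helper below is not part of AIFlowy. It parses a file in that shape and checks that each entry has `title`, `key`, and `options`, and that every model has an `llmModel`. Two things are assumptions inferred from the default values rather than documented behavior: that `key` must be unique, and that a request URL is `llmEndpoint` followed by a path field such as `chatPath`, `embedPath`, or `rerankPath` (for example `https://api.deepseek.com` + `/chat/completions`).

```python
import json

# Hypothetical helpers for inspecting an ai-brands.json-style file.
# Field names come from the default listing; the validation rules and the
# endpoint + path joining are assumptions, not AIFlowy's actual code.

SAMPLE = """
[
  {
    "title": "DeepSeek",
    "key": "deepseek",
    "options": {
      "llmEndpoint": "https://api.deepseek.com",
      "chatPath": "/chat/completions",
      "modelList": [
        {"llmModel": "deepseek-chat", "supportChat": true, "title": "DeepSeek-V3-0324"},
        {"llmModel": "deepseek-reasoner", "supportChat": true, "title": "DeepSeek-R1-0528"}
      ]
    }
  }
]
"""


def load_brands(text: str) -> list:
    """Parse the provider array and check the fields the listing relies on."""
    brands = json.loads(text)
    if not isinstance(brands, list):
        raise ValueError("ai-brands.json must contain a JSON array")
    seen_keys = set()
    for brand in brands:
        for field in ("title", "key", "options"):
            if field not in brand:
                raise ValueError(f"provider is missing {field!r}: {brand}")
        # Assumption: `key` identifies a provider, so it should be unique.
        if brand["key"] in seen_keys:
            raise ValueError(f"duplicate provider key {brand['key']!r}")
        seen_keys.add(brand["key"])
        # Every model entry needs the ID that is sent to the provider.
        for model in brand["options"].get("modelList", []):
            if "llmModel" not in model:
                raise ValueError(f"model in {brand['key']!r} is missing 'llmModel'")
    return brands


def endpoint_url(brand: dict, path_field: str):
    """Join llmEndpoint with a path such as chatPath or embedPath; None if the path is absent."""
    options = brand["options"]
    path = options.get(path_field)
    if path is None:
        return None
    return options["llmEndpoint"].rstrip("/") + path


def models_with(brand: dict, flag: str) -> list:
    """Return the llmModel IDs whose capability flag (e.g. supportChat) is true."""
    return [m["llmModel"] for m in brand["options"].get("modelList", []) if m.get(flag)]


if __name__ == "__main__":
    for brand in load_brands(SAMPLE):
        print(brand["title"], endpoint_url(brand, "chatPath"), models_with(brand, "supportChat"))
```

The sample omits the `icon` field for brevity. To check the real file, pass its contents to `load_brands` instead of `SAMPLE`.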

Adding a New Provider

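The provider list is configured in the backend file aiflowy-starter → src → main → resources → ai-brands.json. To add a provider, add an entry to the JSON array in this file. The system's default configuration is as follows: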

json

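[
  {
    "title": "DeepSeek",
    "key": "deepseek",
    "options": {
      "llmEndpoint": "https://api.deepseek.com",
      "chatPath": "/chat/completions",
      "modelList": [
        {
          "llmModel": "deepseek-reasoner",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-R1-0528",
          "description": "DeepSeek-R1-0528 is a reasoning model driven by reinforcement learning (RL) that addresses repetition and readability issues. Before the RL stage, DeepSeek-R1 incorporates cold-start data to further improve reasoning performance. It performs comparably to OpenAI-o1 on math, code, and reasoning tasks, and its carefully designed training method improves overall results."
        },
        {
          "llmModel": "deepseek-chat",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-V3-0324",
          "description": "The new DeepSeek-V3 (DeepSeek-V3-0324) uses the same base model as the earlier DeepSeek-V3-1226 and changes only the post-training method. Drawing on the reinforcement learning techniques used to train DeepSeek-R1, the new V3 model performs substantially better on reasoning tasks and scores higher than GPT-4.5 on math and coding benchmarks. It also shows some improvement in tool calling, role-play, and casual Q&A."
        }
      ]
    },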
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>Deep Seek</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -240.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"DeepSeek\" transform=\"translate(16, 64)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#4C6CFF\"></path>\n <path d=\"M17.9883214,9.01580701 C17.9533799,8.42770822 17.464198,9.10491289 17.2632841,9.19401877 C16.9400746,9.34549876 16.634336,9.26530347 16.3111265,9.36331994 C16.1102126,9.42569405 15.9617109,9.59499522 15.7782677,9.6841011 C15.6734431,9.57717405 15.6647077,9.41678346 15.5860892,9.28312465 C15.3677044,8.93561172 14.9134641,8.90887996 14.686344,8.62374115 C14.5203716,8.41879763 14.5553131,8.16039058 14.3718699,8 L14.3194576,8 C14.0137189,8.0712847 13.926365,8.54354586 13.8914235,8.83759526 C13.8040696,9.67519051 14.2058975,10.4325905 14.8523164,10.9137622 C14.8523164,11.4483975 14.6251962,11.5107716 14.1884267,11.0741528 C13.5507433,10.4504117 12.9217952,9.83558109 12.2316994,9.29203524 C12.0831977,9.12273407 12.0307854,8.9534329 12.1705516,8.74848938 C12.9043244,8.15147999 12.0395208,8.03564235 11.5153974,8.19603293 C10.7554184,8.54354586 10.1089996,8.61483056 9.28787289,8.48117174 C7.62814877,8.48117174 
6.12566167,9.89795521 6.02957238,11.5820563 C5.99463082,11.956301 5.98589543,12.3305457 6.02957238,12.7047904 L6.02957238,12.7760751 C6.16060323,14.8255103 7.91641665,16.7412867 9.91682098,16.9551408 L10.2487658,16.9818725 C10.991274,17.0442466 11.7425175,16.9462302 12.4413487,16.6789125 L12.4588195,16.6789125 C12.5723796,16.6343596 12.6772043,16.5898067 12.7820289,16.5363431 C12.9130598,16.4650584 13.4284478,16.0551714 13.4721248,16.0462608 C13.5070663,16.0462608 13.7953342,16.1620985 13.9001589,16.1888302 C14.2146329,16.2422937 15.559883,16.3759526 15.5074707,15.8502279 C15.4812645,15.5829102 14.6688732,15.4046985 14.4504884,15.2888608 C14.4155469,15.2710397 14.3456638,15.2888608 14.3806053,15.199755 C14.4504884,15.0393644 14.9134641,14.5849244 15.0619658,14.3710703 C15.7083846,13.4621903 16.0228587,12.3929198 16.0403294,11.2880069 C16.0403294,11.0117787 16.4072158,11.0652422 16.634336,10.9761363 C17.3943149,10.6820869 18.0494692,9.88013403 17.9970568,9.00689642 L17.9883214,9.01580701 Z M13.2275338,15.9838867 C12.0307854,16.1353667 11.6289574,15.4225197 10.746683,14.8789738 C10.4671506,14.7096726 9.75958396,14.2374115 9.93429176,14.9502585 C9.99543949,15.2086656 10.3623259,15.502715 9.9867041,15.6720161 C9.58487616,15.8591385 8.9297219,15.4670726 8.60651246,15.2264867 C7.74170884,14.5671032 7.0690838,13.4087268 6.88564061,12.3216351 C6.85069905,12.0988704 6.70219742,11.3682022 6.96425912,11.2880069 C7.8640043,11.0206893 9.30534367,11.6622516 10.0303811,12.2057975 C11.2183941,13.0968562 11.7425175,14.5849244 12.8344413,15.5918208 C12.9130598,15.6631055 13.2362692,15.8680491 13.2624754,15.8947808 C13.2624754,15.8947808 13.2799462,16.0106185 13.2362692,16.0017079 L13.2275338,15.9838867 Z M12.5461734,12.259261 C12.7470874,12.4642045 12.3539948,12.6513268 12.2928471,12.4374727 C12.2579055,12.3038139 12.4238779,12.1345128 12.5461734,12.259261 Z M13.926365,13.1503198 C13.6730387,13.426548 12.9654721,13.2037833 12.8868536,12.8562704 C12.8257059,12.5889527 
12.9916783,12.2414398 12.7034104,12.0721386 C12.5461734,11.9830328 12.02205,11.9741222 12.2666409,11.7068045 C12.5985857,11.3414704 13.2187984,11.8315528 13.4633894,12.1166916 C13.611891,12.2859927 14.1010728,12.972108 13.926365,13.1592304 L13.926365,13.1503198 Z\" id=\"形状\" fill=\"#FFFFFF\" fill-rule=\"nonzero\"></path>\n </g>\n </g>\n </g>\n </g>\n</svg>"
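  },
  {
    "title": "Open AI",
    "key": "openai",
    "options": {
      "llmEndpoint": "https://api.openai.com",
      "chatPath": "/v1/chat/completions",
      "embedPath": "/v1/embeddings",
      "modelList": [
        {
          "llmModel": "o4-mini",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "o4-mini",
          "description": "o4-mini is OpenAI's latest small o-series model. It is optimized for fast, effective reasoning and performs very efficiently on coding and visual tasks."
        },
        {
          "llmModel": "o3",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "o3",
          "description": "o3 is a well-rounded, powerful model that works across domains. It sets a new standard for math, science, coding, and visual reasoning tasks, and it also excels at technical writing and instruction following. Use it for multi-step problems that involve analysis across text, code, and images."
        },
        {
          "llmModel": "o3-pro",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "o3-pro",
          "description": "The o-series models are trained with reinforcement learning to think before they answer and to perform complex reasoning. The o3-pro model uses more compute to think more deeply and consistently provide better answers."
        },
        {
          "llmModel": "o3-mini",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "o3-mini",
          "description": "o3-mini is OpenAI's latest small reasoning model, delivering high intelligence at the same cost and latency targets as o1-mini. It supports key developer features such as Structured Outputs, function calling, and the Batch API."
        },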
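        {
          "llmModel": "gpt-4.1",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "GPT-4.1",
          "description": "GPT-4.1 is OpenAI's flagship model for complex tasks. It is well suited to solving problems across domains."
        },
        {
          "llmModel": "gpt-4o",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "GPT-4o",
          "description": "GPT-4o (\"o\" for \"omni\") is OpenAI's versatile, high-intelligence flagship model. It accepts text and image inputs and produces text outputs (including Structured Outputs). It is the best model for most tasks and the most capable model outside the o-series."
        },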
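        {
          "llmModel": "text-embedding-3-small",
          "supportEmbed": true,
          "title": "text-embedding-3-small",
          "description": "text-embedding-3-small is an improved, higher-performance version of the Ada embedding model. Embeddings are numerical representations of text that can be used to measure the relatedness between two pieces of text. They are useful for search, clustering, recommendations, anomaly detection, and classification tasks."
        },
        {
          "llmModel": "text-embedding-3-large",
          "supportEmbed": true,
          "title": "text-embedding-3-large",
          "description": "text-embedding-3-large is OpenAI's most capable embedding model for both English and non-English tasks. Embeddings are numerical representations of text that can be used to measure the relatedness between two pieces of text. They are useful for search, clustering, recommendations, anomaly detection, and classification tasks."
        },
        {
          "llmModel": "text-embedding-ada-002",
          "supportEmbed": true,
          "title": "text-embedding-ada-002",
          "description": "text-embedding-ada-002 is an improved, higher-performance version of the Ada embedding model. Embeddings are numerical representations of text that can be used to measure the relatedness between two pieces of text. They are useful for search, clustering, recommendations, anomaly detection, and classification tasks."
        }
      ]
    },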
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>Open AI</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -288.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"Open AI\" transform=\"translate(16, 112)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#00C1D4\"></path>\n <g id=\"openai-fill\" transform=\"translate(6, 6)\" fill=\"#FFFFFF\" fill-rule=\"nonzero\">\n <rect id=\"矩形\" opacity=\"0\" x=\"0\" y=\"0\" width=\"12\" height=\"12\"></rect>\n <path d=\"M10.281,5.094 C10.4060039,4.74999609 10.4375039,4.40599219 10.4060039,4.06250391 C10.3749961,3.7185 10.2500039,3.375 10.0935,3.06249609 C9.81249609,2.5935 9.40599609,2.2185 8.93750391,2.00000391 C8.4375,1.78100391 7.90599609,1.71849609 7.37499609,1.8435 C7.125,1.59350391 6.84350391,1.37499609 6.531,1.21850391 C6.21849609,1.06299609 5.84349609,0.999952093 5.49999609,0.999952093 C4.96343795,0.996866259 4.43920265,1.16077989 3.99999609,1.46900391 C3.5625,1.78100391 3.24999609,2.21900391 3.09350391,2.71899609 C2.71850391,2.8125 2.406,2.96900391 2.09349609,3.15650391 C1.81250391,3.375 1.59351562,3.65648437 1.40601563,3.9375 C1.12501172,4.40649609 1.03101563,4.93749609 1.09351172,5.469 C1.15630801,5.99895515 1.37366874,6.49871405 
1.71850781,6.906 C1.60031873,7.23617933 1.5576086,7.58864407 1.59351563,7.93749609 C1.62501563,8.2815 1.75000781,8.625 1.90600781,8.93750391 C2.18751563,9.4065 2.59351172,9.7815 3.06250781,9.99999609 C3.56251172,10.2189961 4.09351172,10.2815039 4.62501563,10.1565 C4.87501172,10.4064961 5.15601563,10.6250039 5.46850781,10.7814961 C5.78101172,10.9374961 6.15601172,11.0000479 6.50001563,11.0000479 C7.03657377,11.0031337 7.56080907,10.8392201 8.00001563,10.5309961 C8.43751172,10.2185039 8.75001563,9.78099609 8.90601563,9.28100391 C9.25907784,9.21387679 9.59117389,9.06393395 9.87501563,8.84349609 C10.1559961,8.625 10.4060039,8.37500391 10.5624961,8.0625 C10.8435,7.59350391 10.9374961,7.06250391 10.875,6.531 C10.8125039,6 10.6250039,5.49999609 10.281,5.094 Z M6.531,10.3440019 C6.03099609,10.3440019 5.65599609,10.1874961 5.31249609,9.90650391 C5.31249609,9.90650391 5.34350391,9.87500391 5.37500391,9.87500391 L7.37499609,8.71850391 C7.43082467,8.69392633 7.47542243,8.64932858 7.5,8.5935 C7.53099609,8.53100391 7.53099609,8.49999609 7.53099609,8.4375 L7.53099609,5.625 L8.37500391,6.125 L8.37500391,8.4375 C8.39237852,8.93741415 8.20574869,9.42290714 7.85798479,9.78245728 C7.51022089,10.1420074 7.03121548,10.3447091 6.531,10.3440019 L6.531,10.3440019 Z M2.49999609,8.625 C2.28099609,8.25 2.18750391,7.81250391 2.28099609,7.37499609 C2.28099609,7.37499609 2.31249609,7.40649609 2.34350391,7.40649609 L4.34349609,8.56250391 C4.39153122,8.58788664 4.44588533,8.59882665 4.5,8.59400391 C4.56249609,8.59400391 4.62500391,8.59400391 4.656,8.56250391 L7.0935,7.15599609 L7.0935,8.12499609 L5.0625,9.31250391 C4.63704346,9.55893138 4.1311577,9.62637101 3.65600391,9.50000391 C3.156,9.375 2.75000391,9.06249609 2.49999609,8.625 Z M1.96851563,4.281 C2.18957542,3.90784535 2.53144079,3.62137269 2.93751562,3.46900781 L2.93751562,5.844 C2.93751562,5.90599219 2.93751562,5.96900781 2.96851172,6 C2.99308929,6.05582858 3.03768705,6.10042633 3.09351562,6.12500391 L5.53101562,7.5315 L4.6875,8.03150391 
L2.68749609,6.87500391 C2.25718123,6.63203718 1.94206694,6.22688663 1.81250391,5.75000391 C1.6875,5.28099609 1.71849609,4.71849609 1.96850391,4.281 L1.96851563,4.281 Z M8.87500781,5.87499609 L6.43750781,4.46849609 L7.28101172,3.96849609 L9.28101563,5.12499609 C9.59350781,5.31249609 9.84351563,5.56250391 10.0000078,5.87499609 C10.1560078,6.1875 10.2500156,6.53150391 10.2185156,6.90650391 C10.1880628,7.25840584 10.057986,7.59434936 9.84351563,7.875 C9.62500781,8.15649609 9.34351172,8.37500391 9.00001172,8.49999609 L9.00001172,6.12500391 C9.00001172,6.06249609 9.00001172,6 8.96851172,5.96849609 C8.96851172,5.96849609 8.93750781,5.90650781 8.87500781,5.87499609 L8.87500781,5.87499609 Z M9.71851172,4.62500391 C9.71851172,4.62500391 9.68751563,4.59350391 9.65601563,4.59350391 L7.65601172,3.4375 C7.59351563,3.40599609 7.56250781,3.40599609 7.50001172,3.40599609 C7.43751563,3.40599609 7.37500781,3.40599609 7.34350781,3.4375 L4.90600781,4.84400391 L4.90600781,3.87500391 L6.9375,2.68749609 C7.25000391,2.49999609 7.59350391,2.4375 7.96850391,2.4375 C8.31249609,2.4375 8.65599609,2.56250391 8.9685,2.78150391 C9.24999609,3 9.50000391,3.28149609 9.62499609,3.594 C9.74998828,3.90650391 9.78099609,4.28150391 9.7185,4.62500391 L9.71851172,4.62500391 Z M4.4685,6.375 L3.62499609,5.87499609 L3.62499609,3.531 C3.62499609,3.1875 3.7185,2.8125 3.906,2.53100391 C4.0935,2.21900391 4.37499609,2.00000391 4.6875,1.84400391 C5.00597592,1.68413346 5.36698742,1.62950923 5.71850391,1.68800391 C6.06249609,1.71950391 6.40599609,1.87550391 6.68750391,2.09450391 C6.68750391,2.09450391 6.65600391,2.1255 6.62499609,2.1255 L4.62499609,3.282 C4.56917533,3.30657757 4.52457757,3.35117533 4.5,3.40700391 C4.4685,3.4695 4.4685,3.50049609 4.4685,3.56300391 L4.4685,6.37550391 L4.4685,6.375 Z M4.90599609,5.37500391 L6,4.74999609 L7.0935,5.37500391 L7.0935,6.62499609 L6,7.24999609 L4.90599609,6.62499609 L4.90599609,5.37500391 L4.90599609,5.37500391 Z\" id=\"形状\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"
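  },
  {
    "title": "阿里百炼",
    "key": "aliyun",
    "options": {
      "llmEndpoint": "https://dashscope.aliyuncs.com",
      "chatPath": "/compatible-mode/v1/chat/completions",
      "embedPath": "/compatible-mode/v1/embeddings",
      "rerankPath": "/api/v1/services/rerank/text-rerank/text-rerank",
      "modelList": [
        {
          "llmModel": "qwen-plus",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "通义千问-Plus",
          "description": "A Qwen3-series Plus model that effectively combines thinking and non-thinking modes and can switch between them during a conversation. Its reasoning significantly exceeds QwQ and its general capabilities significantly exceed Qwen2.5-Plus, reaching industry SOTA for models of its size."
        },
        {
          "llmModel": "qwen-turbo",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "通义千问-Turbo",
          "description": "A Qwen3-series Turbo model that effectively combines thinking and non-thinking modes and can switch between them during a conversation. With fewer parameters, its reasoning rivals QwQ-32B, and its general capabilities significantly exceed Qwen2.5-Turbo, reaching industry SOTA for models of its size."
        },
        {
          "llmModel": "qwen-max",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "通义千问-Max",
          "description": "A very large, hundred-billion-parameter-scale language model in the Qwen2.5 series that accepts input in Chinese, English, and other languages. qwen-max is updated on a rolling basis as the model is upgraded."
        },
        {
          "llmModel": "deepseek-r1-distill-qwen-7b",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-R1-Distill-Qwen-7B",
          "description": "DeepSeek-R1-Distill-Qwen-7B is a distilled large language model based on Qwen2.5-Math-7B and trained on outputs from DeepSeek R1."
        },
        {
          "llmModel": "deepseek-r1-distill-qwen-14b",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-R1-Distill-Qwen-14B",
          "description": "DeepSeek-R1-Distill-Qwen-14B is a distilled large language model based on Qwen2.5-14B and trained on outputs from DeepSeek R1."
        },
        {
          "llmModel": "deepseek-r1-distill-qwen-32b",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-R1-Distill-Qwen-32B",
          "description": "DeepSeek-R1-Distill-Qwen-32B is a distilled large language model based on Qwen2.5-32B and trained on outputs from DeepSeek R1."
        },
        {
          "llmModel": "qwen-vl-max",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "通义千问VL-Max",
          "description": "Qwen-VL-Max (qwen-vl-max) is the ultra-large-scale Qwen vision-language model. Compared with the enhanced (Plus) version, it further improves visual reasoning and instruction following, offers a higher level of visual perception and cognition, and delivers the best performance on more complex tasks."
        },
        {
          "llmModel": "qwen-vl-plus",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "通义千问VL-Plus",
          "description": "Qwen-VL-Plus (qwen-vl-plus) is the enhanced version of the large-scale Qwen vision-language model. It substantially improves fine-detail and text recognition, supports images above one megapixel in resolution with any aspect ratio, and delivers strong performance across a wide range of vision tasks."
        },
        {
          "llmModel": "qvq-max",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "通义千问-QVQ-Max",
          "description": "The Qwen QVQ visual reasoning model. It supports visual input and chain-of-thought output and shows stronger capabilities in math, programming, visual analysis, creative writing, and general tasks."
        },
        {
          "llmModel": "qvq-plus",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "通义千问-QVQ-Plus",
          "description": "The enhanced version of the Qwen QVQ visual reasoning model. It supports visual input and chain-of-thought output and shows stronger capabilities in math, programming, visual analysis, creative writing, and general tasks."
        },
        {
          "llmModel": "text-embedding-v4",
          "supportEmbed": true,
          "title": "通用文本向量-v4",
          "description": "A unified multilingual text embedding model trained by Tongyi Lab on Qwen3. Compared with v3, it substantially improves text retrieval, clustering, and classification, scores 15% to 40% higher on benchmarks such as MTEB multilingual, Chinese-English, and code retrieval, and supports user-defined embedding dimensions from 64 to 2048."
        },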
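        {
          "llmModel": "text-embedding-v3",
          "supportEmbed": true,
          "title": "通用文本向量-v3",
          "description": "A general-purpose, unified multilingual text embedding model built by Tongyi Lab on an LLM foundation. It covers many major languages worldwide and provides high-quality embedding services that help developers quickly convert text data into high-quality vectors."
        }
      ]
    },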
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>阿里云</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -384.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"阿里云\" transform=\"translate(16, 208)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#FF6A3D\"></path>\n <g id=\"编组-5\" transform=\"translate(6, 8)\" fill=\"#FFFFFF\" fill-rule=\"nonzero\">\n <polygon id=\"路径\" points=\"4 3.48 8 3.48 8 4.44 4 4.44\"></polygon>\n <path d=\"M9.98130841,0 L7.3271028,0 L7.96261682,0.96 L9.90654206,1.6 C10.2803738,1.72 10.5046729,2.08 10.5046729,2.44 L10.5046729,5.52 C10.5046729,5.92 10.2803738,6.24 9.90654206,6.36 L7.96261682,7 L7.36448598,8 L10.0186916,8 C11.1401869,8 12,7.04 12,5.88 L12,2.12 C12,0.96 11.1028037,0 9.98130841,0 Z\" id=\"路径\"></path>\n <path d=\"M2.05607477,6.4 C1.68224299,6.28 1.45794393,5.92 1.45794393,5.56 L1.45794393,2.44 C1.45794393,2.04 1.68224299,1.72 2.05607477,1.6 L4,0.96 L4.63551402,0 L1.98130841,0 C0.897196262,0 0,0.96 0,2.12 L0,5.84 C0,7.04 0.897196262,7.96 1.98130841,7.96 L4.63551402,7.96 L4,7 L2.05607477,6.4 Z\" id=\"路径\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"
  },
  {
    "title": "火山引擎",
    "key": "volcengine",
    "options": {
      "llmEndpoint": "https://ark.cn-beijing.volces.com",
      "chatPath": "/api/v3/chat/completions",
      "embedPath": "/api/v3/embeddings",
      "multimodalEmbedPath": "/api/v3/embeddings/multimodal",
      "modelList": [
        {
          "llmModel": "doubao-seed-1-6-250615",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "doubao-seed-1.6",
          "description": "A new multimodal deep-thinking model that supports three thinking modes: thinking, non-thinking, and auto. In non-thinking mode it is a substantial improvement over doubao-1-5-pro-32k-250115."
        },
        {
          "llmModel": "doubao-seed-1-6-flash-250615",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "doubao-seed-1.6-flash",
          "description": "A multimodal deep-thinking model with extremely fast inference that supports both text and visual understanding. Its text understanding exceeds the previous-generation Lite models, and its visual understanding rivals competitors' Pro models."
        },
        {
          "llmModel": "doubao-seed-1-6-thinking-250715",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "doubao-seed-1.6-thinking",
          "description": "Substantially strengthened thinking ability. Compared with the doubao 1.5-generation deep-understanding model, it further improves core abilities such as programming, math, and logical reasoning, and it supports visual understanding."
        },
        {
          "llmModel": "deepseek-r1-250528",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "deepseek-r1",
          "description": "deepseek-r1 applies reinforcement learning at scale during post-training and matches the full release of OpenAI o1 on tasks such as math, code, and natural-language reasoning."
        }
      ]
    },
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>火山引擎</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"编组-48\" transform=\"translate(-1.6077, -0.6188)\">\n <g id=\"编组-4备份-12\" fill=\"#3CC8F9\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\"></path>\n </g>\n <g id=\"编组-8\" transform=\"translate(5, 4)\" fill-rule=\"nonzero\">\n <path d=\"M11.8380905,6.4607571 L9.95316879,13.8336926 C9.94855284,13.8539395 9.94866824,13.8749301 9.95345729,13.8950627 C9.95830403,13.9152525 9.96770902,13.9340696 9.98092217,13.9501413 C9.99419301,13.9661559 10.0109835,13.9789676 10.0299666,13.987604 C10.0490074,13.9962404 10.0697214,14.0004728 10.0906663,14 L13.859125,14 C13.8800121,14.0004728 13.9007839,13.9962404 13.919767,13.987604 C13.9388077,13.9789676 13.9555983,13.9661559 13.9688114,13.9501413 C13.9820822,13.9340696 13.9914872,13.9152525 13.9962763,13.8950627 C14.001123,13.8749301 14.0012384,13.8539395 13.9966225,13.8336926 L12.102065,6.4607571 C12.0937563,6.43244564 12.0763888,6.40762305 12.0525589,6.38989264 C12.0287868,6.37221942 11.9998217,6.36266788 11.9700489,6.36266788 C11.9403337,6.36266788 11.9113687,6.37221942 11.8875965,6.38989264 C11.8637667,6.40762305 11.8463992,6.43244564 11.8380905,6.4607571 L11.8380905,6.4607571 Z\" id=\"路径\" fill=\"#E0F3FA\"></path>\n <path 
d=\"M1.46346815,8.1313625 L0.00337777244,13.8334638 C-0.00122944792,13.8536536 -0.00112221372,13.8746441 0.00369130736,13.8948339 C0.00850482727,13.9150237 0.0178977417,13.9338408 0.0311552592,13.9498554 C0.044412719,13.9658699 0.0611851643,13.9787388 0.0801965167,13.9873752 C0.0992072922,13.9960116 0.119955971,14.0002441 0.140862747,13.9997712 L3.05004972,13.9997712 C3.07095996,14.0002441 3.09170864,13.9960116 3.1107148,13.9873752 C3.12972673,13.9787388 3.14649993,13.9658699 3.15975923,13.9498554 C3.17301277,13.9338408 3.18240622,13.9150237 3.18722411,13.8948339 C3.19203624,13.8746441 3.1921401,13.8536536 3.18753569,13.8334638 L1.72606935,8.1313625 C1.71736252,8.10356579 1.69992578,8.07931516 1.67630367,8.0620423 C1.65268157,8.04476945 1.62410886,8.03550388 1.59476875,8.03550388 C1.56542864,8.03550388 1.53685593,8.04476945 1.51323382,8.0620423 C1.48961172,8.07931516 1.47217498,8.10356579 1.46346815,8.1313625 L1.46346815,8.1313625 Z\" id=\"路径\" fill=\"#E0F3FA\"></path>\n <path d=\"M4.03854889,3.41026218 L1.37821162,13.8331778 C1.37372838,13.8528528 1.3737053,13.8732714 1.37813661,13.8929465 C1.38256792,13.9126215 1.39134976,13.9310382 1.40384166,13.9469384 C1.41633357,13.9628386 1.43222973,13.9758218 1.45038194,13.9849158 C1.46853415,13.9939526 1.48849235,13.9989286 1.50881983,13.9994852 L6.83088491,13.9994852 C6.85177207,13.9999581 6.87254383,13.9957257 6.89152691,13.9870892 C6.91056769,13.9784528 6.92730049,13.9656412 6.94057134,13.9496266 C6.95384219,13.933612 6.96324718,13.9147377 6.96803622,13.8946051 C6.97288296,13.8744153 6.97294066,13.8534248 6.96838242,13.8331778 L4.30114431,3.41026218 C4.29244325,3.38248263 4.27500651,3.35819768 4.25138441,3.3409477 C4.22775653,3.32370345 4.19918959,3.31439784 4.16984948,3.31439784 C4.14050937,3.31439784 4.11193667,3.32370345 4.08831456,3.3409477 C4.06468668,3.35819768 4.04724995,3.38248263 4.03854889,3.41026218 Z\" id=\"路径\" fill=\"#FFFFFF\"></path>\n <path d=\"M8.62781516,0.0958586275 C8.61910257,0.0680847913 
8.60167737,0.043799843 8.57807834,0.0265498657 C8.55442162,0.00930560781 8.52586045,0 8.49654919,0 C8.46718023,0 8.43861906,0.00930560781 8.41502003,0.0265498657 C8.39136331,0.043799843 8.37393811,0.0680847913 8.36522551,0.0958586275 L4.72874251,13.833235 C4.7241381,13.8534248 4.72424196,13.8744153 4.72905409,13.8946051 C4.73387198,13.9147949 4.74326543,13.933612 4.75651897,13.9496266 C4.76977827,13.9656412 4.78655147,13.97851 4.8055634,13.9871464 C4.82457533,13.9957829 4.84532401,13.9999581 4.86622847,13.9995344 L12.1336842,13.9995344 C12.154629,13.9999581 12.1753431,13.9957829 12.1943839,13.9871464 C12.213367,13.97851 12.2301575,13.9656412 12.2434283,13.9496266 C12.2566415,13.933612 12.2660465,13.9147949 12.2708932,13.8946051 C12.2756822,13.8744153 12.2757976,13.8534248 12.2711817,13.833235 L8.62781516,0.0958586275 Z\" id=\"路径\" fill=\"#FFFFFF\"></path>\n <path d=\"M5.95650926,4.92616114 L3.64948807,13.8322627 C3.64463556,13.8525669 3.64456055,13.873729 3.64925727,13.8940904 C3.65395977,13.9143945 3.66331283,13.9334404 3.67660099,13.9496266 C3.68988337,13.9658127 3.70674889,13.978796 3.72588198,13.9874896 C3.74502085,13.9961832 3.76591955,14.0004728 3.78697404,13.9999514 L8.38997852,13.9999514 C8.41103878,14.0004728 8.43192594,13.9961832 8.45108211,13.9874896 C8.47018059,13.978796 8.4870865,13.9658127 8.50035734,13.9496266 C8.51362819,13.9334404 8.52297548,13.9143945 8.52770683,13.8940904 C8.53238047,13.873729 8.53232277,13.8525669 8.52747603,13.8322627 L6.21909891,4.92616114 C6.21038631,4.89836443 6.19296111,4.87411379 6.16930439,4.85684094 C6.14570536,4.83956808 6.11714419,4.83030251 6.08777523,4.83030251 C6.05846397,4.83030251 6.0299028,4.83956808 6.00624608,4.85684094 C5.98264705,4.87411379 5.96522185,4.89836443 5.95650926,4.92616114 Z\" id=\"路径\" fill=\"#E0F3FA\"></path>\n </g>\n </g>\n </g>\n</svg>"
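  },
  {
    "title": "百度千帆",
    "key": "baidu",
    "options": {
      "llmEndpoint": "https://qianfan.baidubce.com",
      "chatPath": "/v2/chat/completions",
      "embedPath": "/v2/embeddings",
      "rerankPath": "/v2/rerank",
      "modelList": [
        {
          "llmModel": "ernie-x1-turbo-32k",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "ERNIE X1 Turbo",
          "description": "Positioning: a deep-thinking model with stronger understanding, planning, reflection, and self-improvement abilities. Use cases: performs especially well in Chinese knowledge Q&A, literary creation, document writing, everyday conversation, logical reasoning, complex calculation, and tool calling."
        },
        {
          "llmModel": "ernie-4.5-turbo-128k",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "ERNIE 4.5 Turbo",
          "description": "Positioning: better handles multi-turn conversations with long histories and long-document understanding and Q&A. Use cases: 1) complex semantic understanding: Chinese knowledge Q&A and literary creation, and especially document understanding (e.g., DocVQA tasks); 2) mathematical reasoning: strong results on Chinese math problems (the CMath benchmark)."
        },
        {
          "llmModel": "ernie-4.5-turbo-vl-32k",
          "supportChat": true,
          "supportFunctionCalling": true,
          "multimodal": true,
          "title": "ERNIE 4.5 Turbo VL",
          "description": "Positioning: a multimodal foundation model that supports cross-modal text and image input and generation. Use cases: generating marketing copy from combined text and images, designing video scripts, and similar tasks."
        },
        {
          "llmModel": "deepseek-r1-250528",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "DeepSeek-R1",
          "description": "Positioning: a reasoning model specially optimized for math and logic tasks. Use cases: complex math problems such as advanced-mathematics exercises and scientific computing simulations; logical decomposition and planning such as business-process automation and hypothesis testing in academic research; STEM applications such as physical modeling and quantitative financial analysis that require high-precision reasoning."
        }
      ]
    },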
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>百度</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -432.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"百度\" transform=\"translate(16, 256)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#2176FF\"></path>\n <g id=\"百度学术\" transform=\"translate(6, 6)\" fill=\"#FFFFFF\" fill-rule=\"nonzero\">\n <rect id=\"矩形\" opacity=\"0\" x=\"0\" y=\"0\" width=\"12\" height=\"12\"></rect>\n <path d=\"M2.07466406,6.32308594 C3.40675781,6.04272656 3.22533984,4.485 3.18561328,4.14392578 C3.12039844,3.61919531 2.48921484,2.70090234 1.63313672,2.77361719 C0.555175781,2.86807031 0.397757812,4.39279688 0.397757812,4.39279688 C0.251578125,5.09744531 0.74709375,6.60344531 2.07541406,6.32308594 L2.07466406,6.32308594 Z M3.48921094,9.03298828 C3.45023437,9.14242969 3.36326953,9.42278906 3.43898437,9.66642187 C3.58741406,10.2136406 4.12114453,10.1244375 4.12114453,10.1244375 L4.87076953,10.1244375 L4.87076953,8.6251875 L4.12114453,8.6251875 C3.78605859,8.72264062 3.52519922,8.92354687 3.48921094,9.03298828 Z M4.54693359,3.70989844 C5.28307031,3.70989844 5.87751562,2.88080859 5.87751562,1.8568125 C5.87751562,0.833578125 5.28307031,0.00525 4.54693359,0.00525 
C3.81229687,0.00525 3.21635156,0.833578125 3.21635156,1.8568125 C3.21635156,2.88080859 3.81304687,3.70989844 4.54693359,3.70989844 Z M7.71485156,3.83282813 C8.69835937,3.95728125 9.33028125,2.93028516 9.45622266,2.15217188 C9.58366406,1.37480859 8.94948047,0.471515625 8.25383203,0.316347656 C7.55593359,0.159667969 6.68561719,1.25337891 6.60615234,1.96626563 C6.51169922,2.83808203 6.73359375,3.70914844 7.71560156,3.83282813 L7.71485156,3.83282813 Z M10.1241445,8.40855469 C10.1241445,8.40855469 8.60390625,7.25636719 7.71485156,6.01198828 C6.5109375,4.17616406 4.80105469,4.92278906 4.22909766,5.85683203 C3.66012891,6.79011328 2.77257422,7.38005859 2.64589453,7.53747656 C2.51845312,7.69115625 0.809296875,8.59444922 1.18860937,10.2436289 C1.56792187,11.8942969 2.99820703,12 2.99820703,12 C2.99820703,12 3.88276172,11.9572734 5.02219922,11.7076406 C6.161625,11.4602695 7.14364453,11.7691172 7.14364453,11.7691172 C7.14364453,11.7691172 9.80705859,12.6416836 10.5356836,10.9617656 C11.262832,9.28110937 10.1241445,8.40930469 10.1241445,8.40930469 L10.1241445,8.40855469 Z M5.61889453,10.8748125 L3.74633203,10.8748125 C2.99895703,10.8568242 2.79055078,10.2646172 2.75307422,10.1791641 C2.71559766,10.0922109 2.50420312,9.69116016 2.61664453,9.009 C2.93899219,7.98501562 3.74633203,7.8755625 3.74633203,7.8755625 L4.87076953,7.8755625 L4.87076953,6.751125 L5.61964453,6.751125 L5.61964453,10.8740625 L5.61889453,10.8748125 Z M8.61215625,10.8748125 L6.74108203,10.8748125 C6.00420703,10.8425859 5.99145703,10.1664141 5.99145703,10.1664141 L5.99295703,7.8748125 L6.74108203,7.8748125 L6.74108203,9.748875 C6.79055859,9.95576953 7.11589453,10.1244375 7.11589453,10.1244375 L7.86401953,10.1244375 L7.86401953,7.8755625 L8.61215625,7.8755625 L8.61215625,10.8740625 L8.61215625,10.8748125 Z M11.6121562,5.13942187 C11.6121562,4.76687109 11.2958086,3.64617187 10.1241445,3.64617187 C8.94948047,3.64617187 8.7928125,4.70390625 8.7928125,5.45276953 C8.7928125,6.16641797 8.85427734,7.16266406 
10.3130508,7.1319375 C11.7718242,7.10044922 11.6121562,5.51424609 11.6121562,5.13943359 L11.6121562,5.13942187 Z\" id=\"形状\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"
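  },
  {
    "title": "星火大模型",
    "key": "spark",
    "options": {
      "llmEndpoint": "https://spark-api-open.xf-yun.com",
      "chatPath": "/v1/chat/completions",
      "modelList": [
        {
          "llmModel": "generalv3",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "Spark Pro",
          "description": "A professional-grade large language model specifically optimized for medical, education, and coding scenarios, with lower latency in search scenarios. Suited to business scenarios such as text generation and intelligent Q&A that demand higher performance and faster responses."
        },
        {
          "llmModel": "generalv3.5",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "Spark Max",
          "description": "A flagship large language model with hundreds of billions of parameters and comprehensively upgraded core capabilities, including stronger math, Chinese, coding, and multimodal abilities. Suited to business scenarios such as mathematical computation and logical reasoning that demand higher-quality results."
        },
        {
          "llmModel": "4.0Ultra",
          "supportChat": true,
          "supportFunctionCalling": true,
          "title": "Spark4.0 Ultra",
          "description": "The most capable version of the large language model, surpassing GPT-4 Turbo in text generation, language understanding, knowledge Q&A, logical reasoning, and math. Its web-search pipeline is optimized to provide more accurate answers."
        }
      ]
    },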
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>星火大模型</title>\n <defs>\n <path d=\"M5.17455646,0.0140870265 C5.16806555,0.0248199991 5.16741646,0.0382362148 5.17325828,0.0496399982 L5.17325828,0.0496399982 C5.42250937,0.558114574 5.55297674,1.12092482 5.55167855,1.6917848 L5.55167855,1.6917848 C5.55167855,3.68073879 4.00619198,5.29336792 2.10110879,5.29336792 L2.10110879,5.29336792 C1.3403737,5.29336792 0.600409524,5.03175171 0,4.54742632 L0,4.54742632 C0.0765927826,4.61719064 1.13720809,5.57577926 2.60415461,5.98564465 L2.60415461,5.98564465 C3.95621195,6.36465274 6.22154086,6.35794464 6.88556134,5.50668575 L6.88556134,5.50668575 C7.33343439,4.89624793 7.66901463,4.20531282 7.87737296,3.46943339 L7.87737296,3.46943339 L7.91047662,3.31514691 C8.32394783,1.17660212 6.24945179,0.171056751 5.20636194,0.000670810787 L5.20636194,0.000670810787 C5.20506376,0 5.20311648,0 5.2018183,0 L5.2018183,0 C5.19143284,0 5.18169647,0.00536648629 5.17455646,0.0140870265 L5.17455646,0.0140870265 Z\" id=\"path-1\"></path>\n <path d=\"M0.0201218327,0.00268324315 C0.00843818791,0.00737891865 0,0.0194535128 0,0.0335405393 L0,0.0335405393 L0.000649091378,2.70672152 C0.00778909653,2.88716963 0.116836448,4.58499173 1.71684669,5.59254953 L1.71684669,5.59254953 L1.79343948,5.64017709 L1.86938317,5.68981709 C2.10500334,5.83001655 2.63725827,6.10370735 3.44992067,6.29287599 L3.44992067,6.29287599 C4.52027235,6.5424176 5.40952754,6.87648137 5.91646791,8.00545593 L5.91646791,8.00545593 C5.92295882,8.02021377 5.9398352,8.02826349 5.95541339,8.02356782 L5.95541339,8.02356782 C5.97034249,8.01887214 5.98072795,8.0034435 5.97878068,7.98734404 L5.97878068,7.98734404 C5.82884057,6.62895219 5.2543947,5.35776575 4.34307041,4.36764903 L4.34307041,4.36764903 L4.04773383,4.06578418 L0.0558218585,0.00939135101 C0.0493309447,0.00335405393 0.0415418482,0 
0.0331036603,0 L0.0331036603,0 C0.0285600206,0 0.0246654724,0.000670810787 0.0201218327,0.00268324315 L0.0201218327,0.00268324315 Z\" id=\"path-3\"></path>\n <path d=\"M4.58454552,0.0100621618 L1.97584727,2.66110639 L1.6727216,2.96967935 C0.595879002,4.1415858 -0.00323233973,5.69786682 0,7.31519163 L0,7.31519163 C0,10.7859666 2.69568961,13.5980055 6.02033565,13.5980055 L6.02033565,13.5980055 C7.96825887,13.5980055 9.70068376,12.6313671 10.8015427,11.1327758 L10.8015427,11.1327758 C10.1401186,11.9826931 7.87414062,11.9880596 6.52208328,11.6110639 L6.52208328,11.6110639 C5.05448768,11.2011985 3.99387236,10.2446223 3.91338503,10.1688207 L3.91338503,10.1688207 C3.17471905,9.5711283 2.69828597,8.69236617 2.5911859,7.72774026 L2.5911859,7.72774026 C2.48408582,6.76378516 2.75540602,5.7958052 3.3441319,5.04047225 L3.3441319,5.04047225 C3.57910297,5.50131926 3.90235048,5.98765708 4.34243443,6.48539869 L4.34243443,6.48539869 C4.68969832,6.87648137 5.0674695,7.23804839 5.47055525,7.56741648 L5.47055525,7.56741648 L5.53676257,7.62309378 C5.7224027,7.79951702 5.79510094,8.07387863 5.71850816,8.32744511 L5.71850816,8.32744511 C5.63347718,8.60985645 5.38227882,8.80304995 5.09667862,8.80707482 L5.09667862,8.80707482 C5.02787493,8.80707482 4.96036943,8.79567103 4.89610938,8.77353428 L4.89610938,8.77353428 C4.88118028,8.7688386 4.8643039,8.77621752 4.8571639,8.78963374 L4.8571639,8.78963374 C4.85002389,8.80439157 4.85262026,8.82183266 4.86495299,8.83189482 L4.86495299,8.83189482 C5.19209505,9.104244 5.59907534,9.25316399 6.01903746,9.25182237 L6.01903746,9.25182237 C7.04395275,9.25182237 7.87478971,8.38513483 7.87478971,7.31519163 L7.87478971,7.31519163 C7.87673699,6.81208354 7.6878514,6.32775815 7.35032388,5.96552033 L7.35032388,5.96552033 L7.21141832,5.84410357 L7.07640732,5.72268682 C6.33839042,5.04919279 5.81003004,4.38777336 5.43420613,3.76593176 L5.43420613,3.76593176 C4.83509479,2.77581504 4.62478918,1.88833236 4.57221278,1.21550915 L4.57221278,1.21550915 
C4.52807457,0.647332409 4.59558007,0.232100532 4.63906919,0.040919458 L4.63906919,0.040919458 C4.64231465,0.0268324315 4.63582374,0.0114037834 4.623491,0.00469567551 L4.623491,0.00469567551 C4.61829827,0.00134162157 4.61245645,0 4.60726372,0 L4.60726372,0 C4.59882553,0 4.59103643,0.00335405393 4.58454552,0.0100621618 L4.58454552,0.0100621618 Z\" id=\"path-5\"></path>\n </defs>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -480.6188)\">\n <g id=\"星火大模型\" transform=\"translate(232, 176)\">\n <g id=\"编组-4备份-5\" transform=\"translate(16, 304)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#3CC8F9\"></path>\n <g id=\"编组\" transform=\"translate(6, 4)\">\n <g transform=\"translate(3.9186, 7.0254)\">\n <mask id=\"mask-2\" fill=\"white\">\n <use xlink:href=\"#path-1\"></use>\n </mask>\n <g id=\"Clip-2\"></g>\n <polygon id=\"Fill-1\" fill=\"#EEE5E5\" mask=\"url(#mask-2)\" points=\"0.000649091378 6.22579491 7.9650003 6.22579491 7.9650003 0 0.000649091378 0\"></polygon>\n </g>\n <g transform=\"translate(6.021, 0)\">\n <mask id=\"mask-4\" fill=\"white\">\n <use xlink:href=\"#path-3\"></use>\n </mask>\n <g id=\"Clip-4\"></g>\n <polygon id=\"Fill-3\" fill=\"#FFFFFF\" mask=\"url(#mask-4)\" points=\"0 8.02490944 5.97878068 8.02490944 5.97878068 0 0 0\"></polygon>\n </g>\n <g transform=\"translate(0, 1.402)\">\n <mask id=\"mask-6\" fill=\"white\">\n <use xlink:href=\"#path-5\"></use>\n 
</mask>\n <g id=\"Clip-6\"></g>\n <polygon id=\"Fill-5\" fill=\"#FFFFFF\" mask=\"url(#mask-6)\" points=\"1.3117161e-05 13.5980055 10.8015427 13.5980055 10.8015427 0 1.3117161e-05 0\"></polygon>\n </g>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"

},
{
"title": "Gitee",
"key": "gitee",
"options":{
"llmEndpoint":"https://ai.gitee.com",
"chatPath":"/v1/chat/completions",
"embedPath":"/v1/embeddings",
"rerankPath":"/v1/rerank",
"modelList":[
{
"llmModel":"kimi-k2-instruct",
"supportChat":true,
"supportFunctionCalling":true,
"title":"kimi-k2-instruct",
"description":"Kimi K2 是一个最先进的混合专家 (MoE) 语言模型,激活参数为 320 亿,总参数为 1 万亿。通过 Muon 优化器进行训练,Kimi K2 在前沿知识、推理和编码任务上表现出色,同时在智能体能力方面进行了精心优化"
},
{
"llmModel":"ERNIE-4.5-Turbo",
"supportChat":true,
"supportFunctionCalling":true,
"title":"ERNIE-4.5-Turbo",
"description":"文心4.5 Turbo在去幻觉、逻辑推理和代码能力等方面也有着明显增强。对比文心4.5,速度更快、价格更低。"
},
{
"llmModel":"ERNIE-X1-Turbo",
"supportChat":true,
"supportFunctionCalling":true,
"title":"ERNIE-X1-Turbo",
"description":"文心ERNIE X1 Turbo具备更长的思维链,更强的深度思考能力,进一步增强了多模态和工具调用能力,擅长文学创作、逻辑推理等"
},
{
"llmModel":"DeepSeek-R1",
"supportChat":true,
"supportFunctionCalling":true,
"title":"DeepSeek-R1",
"description":"DeepSeek-R1 是一款采用强化学习技术的推理模型,凭借少量标注数据大幅提升推理能力,性能媲美 OpenAI o1。"
},
{
"llmModel":"DeepSeek-V3",
"supportChat":true,
"supportFunctionCalling":true,
"title":"DeepSeek-V3",
"description":"DeepSeek-V3 是 685B 参数的高效 MoE 语言模型,性能优越,训练稳定,超越开源模型,并接近顶级闭源模型。"
},
{
"llmModel":"Qwen3-235B-A22B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Qwen3-235B-A22B",
"description":"Qwen3 是 Qwen 系列中的最新一代大型语言模型,提供了一系列密集型和混合专家(MoE)模型。基于在训练数据、模型架构和优化技术方面的广泛进步。"
},
{
"llmModel":"Qwen3-30B-A3B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Qwen3-30B-A3B",
"description":"Qwen3 是 Qwen 系列中的最新一代大型语言模型,提供了一系列密集型和混合专家(MoE)模型。基于在训练数据、模型架构和优化技术方面的广泛进步。"
},
{
"llmModel":"Qwen3-32B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Qwen3-32B",
"description":"Qwen3 是 Qwen 系列中的最新一代大型语言模型,提供了一系列密集型和混合专家(MoE)模型。基于在训练数据、模型架构和优化技术方面的广泛进步。"
},
{
"llmModel":"ERNIE-4.5-Turbo-VL",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"ERNIE-4.5-Turbo-VL",
"description":"文心一言大模型全新版本,图片理解、创作、翻译、代码等能力显著提升,首次支持32K上下文长度,首Token时延显著降低。"
},
{
"llmModel":"Qwen2.5-VL-32B-Instruct",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"Qwen2.5-VL-32B-Instruct",
"description":"Qwen2.5-VL-32B-Instruct 是一款拥有 320 亿参数、支持多图输入与复杂图文推理的大规模多模态指令微调模型。"
},
{
"llmModel":"Qwen2-VL-72B-Instruct",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"Qwen2-VL-72B-Instruct",
"description":"Qwen2-VL-72B-Instruct是一款强大的视觉语言模型,支持图像与文本的多模态处理,能够精确识别图像内容并生成相关描述或回答。"
},
{
"llmModel":"InternVL3-78B",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"InternVL3-78B",
"description":"InternVL3-78B 是一款支持中英文、多图多轮对话的大规模多模态模型,具备超强图文理解、推理与生成能力,广泛适用于复杂 AI 应用场景。"
},
{
"llmModel":"InternVL3-38B",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"InternVL3-38B",
"description":"InternVL3-38B 是一款支持中英双语、多模态对话与图像理解的大规模视觉语言模型,具备强大的跨模态推理与视觉问答能力。"
},
{
"llmModel":"Qwen3-Embedding-8B",
"supportEmbed":true,
"title":"Qwen3-Embedding-8B",
"description":"Qwen3‑Embedding‑8B 是 Qwen 系列推出的大规模嵌入模型,专注于生成高质量、多语言及代码向量,支持多种下游任务中的语义匹配与信息检索需求。"
},
{
"llmModel":"Qwen3-Embedding-4B",
"supportEmbed":true,
"title":"Qwen3-Embedding-4B",
"description":"Qwen3-Embedding-4B 是由 Qwen 团队开发的一款高性能文本和代码嵌入模型,专为多语言、多模态任务设计,能够将文本和代码内容转换为语义丰富的向量表示。它广泛适用于语义搜索、跨语言检索、信息匹配、文本相似度分析等多种自然语言处理和代码理解场景。"
}
]
},
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>Gitee</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -624.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"编组-4备份-8\" transform=\"translate(16, 448)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#4285F4\"></path>\n <g id=\"gitee\" transform=\"translate(5, 5)\" fill=\"#FFFFFF\" fill-rule=\"nonzero\">\n <rect id=\"矩形\" opacity=\"0\" x=\"0\" y=\"0\" width=\"14\" height=\"14\"></rect>\n <path d=\"M7,14 C3.13389453,14 0,10.8661055 0,7 C0,3.13389453 3.13389453,0 7,0 C10.8661055,0 14,3.13389453 14,7 C14,10.8661055 10.8661055,14 7,14 Z M10.5430527,6.22230273 L6.5680918,6.22230273 C6.37711556,6.22231028 6.22230273,6.37712923 6.22230273,6.56810547 L6.22194727,7.43225 C6.22194727,7.623 6.37664453,7.77805273 6.56739453,7.77805273 L8.98764453,7.77805273 C9.17875,7.77805273 9.33344727,7.93275 9.33344727,8.1235 L9.33344727,8.29639453 C9.33344727,8.86914294 8.86914294,9.33344727 8.29639453,9.33344727 L5.012,9.33344727 C4.82115726,9.33325109 4.66655263,9.17848737 4.66655273,8.98764453 L4.66655273,5.70394727 C4.6665527,5.13133765 5.13064041,4.6670908 5.70325,4.66689453 L10.5423555,4.66689453 C10.7331929,4.66669836 10.8877953,4.51194297 
10.8878027,4.32110547 L10.8888555,3.45694727 C10.8888555,3.26609909 10.7342426,3.11133317 10.5433945,3.11114453 L5.70325,3.11114453 C5.01562739,3.11114453 4.356173,3.38432494 3.86998244,3.8705796 C3.38379188,4.35683426 3.1106984,5.01632466 3.11078904,5.70394727 L3.11078904,10.5430664 C3.11078904,10.7341582 3.2655,10.8888691 3.45660547,10.8888691 L8.55539453,10.8888691 C9.8439331,10.8888691 10.8885,9.84430224 10.8885,8.55576367 L10.8885,6.56775 C10.8883038,6.37690726 10.7335401,6.22230263 10.5426973,6.22230273 L10.5430527,6.22230273 Z\" id=\"形状\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"

},
{
"title": "硅基流动",
"key": "siliconlow",
"options":{
"llmEndpoint":"https://api.siliconflow.cn",
"chatPath":"/v1/chat/completions",
"embedPath":"/v1/embeddings",
"rerankPath":"/v1/rerank",
"modelList":[
{
"llmModel":"deepseek-ai/DeepSeek-R1",
"supportChat":true,
"supportFunctionCalling":true,
"title":"DeepSeek-R1",
"description":"DeepSeek-R1-0528 是一款强化学习(RL)驱动的推理模型,解决了模型中的重复性和可读性问题。在 RL 之前,DeepSeek-R1 引入了冷启动数据,进一步优化了推理性能。它在数学、代码和推理任务中与 OpenAI-o1 表现相当,并且通过精心设计的训练方法,提升了整体效果。"
},
{
"llmModel":"deepseek-ai/DeepSeek-V3",
"supportChat":true,
"supportFunctionCalling":true,
"title":"DeepSeek-V3",
"description":"新版 DeepSeek-V3 (DeepSeek-V3-0324)与之前的 DeepSeek-V3-1226 使用同样的 base 模型,仅改进了后训练方法。新版 V3 模型借鉴 DeepSeek-R1 模型训练过程中所使用的强化学习技术,大幅提高了在推理类任务上的表现水平,在数学、代码类相关评测集上取得了超过 GPT-4.5 的得分成绩。此外该模型在工具调用、角色扮演、问答闲聊等方面也得到了一定幅度的能力提升。"
},
{
"llmModel":"moonshotai/Kimi-K2-Instruct",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Kimi-K2-Instruct",
"description":"Kimi K2 是一款具备超强代码和 Agent 能力的 MoE 架构基础模型,总参数 1T,激活参数 32B。在通用知识推理、编程、数学、Agent 等主要类别的基准性能测试中,K2 模型的性能超过其他主流开源模型"
},
{
"llmModel":"Tongyi-Zhiwen/QwenLong-L1-32B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"QwenLong-L1-32B",
"description":"QwenLong-L1-32B 是首个使用强化学习训练的长上下文大型推理模型(LRM),专门针对长文本推理任务进行优化。该模型通过渐进式上下文扩展的强化学习框架,实现了从短上下文到长上下文的稳定迁移。在七个长上下文文档问答基准测试中,QwenLong-L1-32B 超越了 OpenAI-o3-mini 和 Qwen3-235B-A22B 等旗舰模型,性能可媲美 Claude-3.7-Sonnet-Thinking。该模型特别擅长数学推理、逻辑推理和多跳推理等复杂任务"
},
{
"llmModel":"Qwen/Qwen3-30B-A3B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Qwen3-30B-A3B",
"description":"Qwen3-30B-A3B 是通义千问系列的最新大语言模型,采用混合专家(MoE)架构,拥有 30.5B 总参数量和 3.3B 激活参数量。该模型独特地支持在思考模式(适用于复杂逻辑推理、数学和编程)和非思考模式(适用于高效的通用对话)之间无缝切换,显著增强了推理能力。模型在数学、代码生成和常识逻辑推理上表现优异,并在创意写作、角色扮演和多轮对话等方面展现出卓越的人类偏好对齐能力。此外,该模型支持 100 多种语言和方言,具备出色的多语言指令遵循和翻译能力"
},
{
"llmModel":"Qwen/Qwen3-32B",
"supportChat":true,
"supportFunctionCalling":true,
"title":"Qwen3-32B",
"description":"Qwen3-32B 是通义千问系列的最新大语言模型,拥有 32.8B 参数量。该模型独特地支持在思考模式(适用于复杂逻辑推理、数学和编程)和非思考模式(适用于高效的通用对话)之间无缝切换,显著增强了推理能力。模型在数学、代码生成和常识逻辑推理上表现优异,并在创意写作、角色扮演和多轮对话等方面展现出卓越的人类偏好对齐能力。此外,该模型支持 100 多种语言和方言,具备出色的多语言指令遵循和翻译能力"
},
{
"llmModel":"MiniMaxAI/MiniMax-M1-80k",
"supportChat":true,
"supportFunctionCalling":true,
"title":"MiniMax-M1-80k",
"description":"MiniMax-M1 是开源权重的大规模混合注意力推理模型,拥有 4560 亿参数,每个 Token 可激活约 459 亿参数。模型原生支持 100 万 Token 的超长上下文,并通过闪电注意力机制,在 10 万 Token 的生成任务中相比 DeepSeek R1 节省 75% 的浮点运算量。同时,MiniMax-M1 采用 MoE(混合专家)架构,结合 CISPO 算法与混合注意力设计的高效强化学习训练,在长输入推理与真实软件工程场景中实现了业界领先的性能。"
},
{
"llmModel":"THUDM/GLM-4.1V-9B-Thinking",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"GLM-4.1V-9B-Thinking",
"description":"GLM-4.1V-9B-Thinking 是由智谱 AI 和清华大学 KEG 实验室联合发布的一款开源视觉语言模型(VLM),专为处理复杂的多模态认知任务而设计。该模型基于 GLM-4-9B-0414 基础模型,通过引入“思维链”(Chain-of-Thought)推理机制和采用强化学习策略,显著提升了其跨模态的推理能力和稳定性。作为一个 9B 参数规模的轻量级模型,它在部署效率和性能之间取得了平衡,在 28 项权威评测基准中,有 18 项的表现持平甚至超越了 72B 参数规模的 Qwen-2.5-VL-72B。该模型不仅在图文理解、数学科学推理、视频理解等任务上表现卓越,还支持高达 4K 分辨率的图像和任意宽高比输入"
},
{
"llmModel":"Qwen/Qwen2.5-VL-32B-Instruct",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"Qwen2.5-VL-32B-Instruct",
"description":"Qwen2.5-VL-32B-Instruct 是通义千问团队推出的多模态大模型,是 Qwen2.5-VL 系列的一部分。该模型不仅精通识别常见物体,还能分析图像中的文本、图表、图标、图形和布局。它可作为视觉智能体,能够推理并动态操控工具,具备使用电脑和手机的能力。此外,这个模型可以精确定位图像中的对象,并为发票、表格等生成结构化输出。相比前代模型 Qwen2-VL,该版本在数学和问题解决能力方面通过强化学习得到了进一步提升,响应风格也更符合人类偏好"
},
{
"llmModel":"Qwen/Qwen2.5-VL-72B-Instruct",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"Qwen2.5-VL-72B-Instruct",
"description":"Qwen2.5-VL 是 Qwen2.5 系列中的视觉语言模型。该模型在多方面有显著提升:具备更强的视觉理解能力,能够识别常见物体、分析文本、图表和布局;作为视觉代理能够推理并动态指导工具使用;支持理解超过 1 小时的长视频并捕捉关键事件;能够通过生成边界框或点准确定位图像中的物体;支持生成结构化输出,尤其适用于发票、表格等扫描数据。模型在多项基准测试中表现出色,包括图像、视频和代理任务评测"
},
{
"llmModel":"deepseek-ai/deepseek-vl2",
"supportChat":true,
"supportFunctionCalling":true,
"multimodal":true,
"title":"deepseek-vl2",
"description":"DeepSeek-VL2 是一个基于 DeepSeekMoE-27B 开发的混合专家(MoE)视觉语言模型,采用稀疏激活的 MoE 架构,在仅激活 4.5B 参数的情况下实现了卓越性能。该模型在视觉问答、光学字符识别、文档/表格/图表理解和视觉定位等多个任务中表现优异,与现有的开源稠密模型和基于 MoE 的模型相比,在使用相同或更少的激活参数的情况下,实现了具有竞争力的或最先进的性能表现"
},
{
"llmModel":"Qwen/Qwen3-Embedding-8B",
"supportEmbed":true,
"title":"Qwen3-Embedding-8B",
"description":"Qwen3-Embedding-8B 是 Qwen3 嵌入模型系列的最新专有模型,专为文本嵌入和排序任务设计。该模型基于 Qwen3 系列的密集基础模型,具有 80 亿参数规模,支持长达 32K 的上下文长度,可生成最高 4096 维的嵌入向量。该模型继承了基础模型卓越的多语言能力,支持超过 100 种语言,具备长文本理解和推理能力。在 MTEB 多语言排行榜上排名第一(截至 2025 年 6 月 5 日,得分 70.58),在文本检索、代码检索、文本分类、文本聚类和双语挖掘等多项任务中表现出色。模型支持用户自定义输出维度(32 到 4096)和指令感知功能,可根据特定任务、语言或场景进行优化"
},
{
"llmModel":"Qwen/Qwen3-Embedding-4B",
"supportEmbed":true,
"title":"Qwen3-Embedding-4B",
"description":"Qwen3-Embedding-4B 是 Qwen3 嵌入模型系列的最新专有模型,专为文本嵌入和排序任务设计。该模型基于 Qwen3 系列的密集基础模型,具有 40 亿参数规模,支持长达 32K 的上下文长度,可生成最高 2560 维的嵌入向量。模型继承了基础模型卓越的多语言能力,支持超过 100 种语言,具备长文本理解和推理能力。在 MTEB 多语言排行榜上表现卓越(得分 69.45),在文本检索、代码检索、文本分类、文本聚类和双语挖掘等多项任务中表现出色。模型支持用户自定义输出维度(32 到 2560)和指令感知功能,可根据特定任务、语言或场景进行优化,在效率和效果之间达到良好平衡"
},
{
"llmModel":"BAAI/bge-m3",
"supportEmbed":true,
"title":"bge-m3",
"description":"BGE-M3 是一个多功能、多语言、多粒度的文本嵌入模型。它支持三种常见的检索功能:密集检索、多向量检索和稀疏检索。该模型可以处理超过100种语言,并且能够处理从短句到长达8192个词元的长文档等不同粒度的输入。BGE-M3在多语言和跨语言检索任务中表现出色,在 MIRACL 和 MKQA 等基准测试中取得了领先结果。它还具有处理长文档检索的能力,在 MLDR 和 NarritiveQA 等数据集上展现了优秀性能"
},
{
"llmModel":"netease-youdao/bce-embedding-base_v1",
"supportEmbed":true,
"title":"bce-embedding-base_v1",
"description":"bce-embedding-base_v1 是由网易有道开发的双语和跨语言嵌入模型。该模型在中英文语义表示和检索任务中表现出色,尤其擅长跨语言场景。它是为检索增强生成(RAG)系统优化的,可以直接应用于教育、医疗、法律等多个领域。该模型不需要特定指令即可使用,能够高效地生成语义向量,为语义搜索和问答系统提供关键支持"
}
]
},
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>硅基流动</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-259.6077, -755.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"编组-7\" transform=\"translate(26, 579)\">\n <rect id=\"矩形\" x=\"0\" y=\"0\" width=\"24\" height=\"24\"></rect>\n <g id=\"编组-4备份-11\" transform=\"translate(1.6077, 0.6188)\" fill=\"#4C6CFF\">\n <path d=\"M12.3923048,0.535898385 L18.7846097,4.22649731 C20.022214,4.94102849 20.7846097,6.26153656 20.7846097,7.69059892 L20.7846097,15.0717968 C20.7846097,16.5008591 20.022214,17.8213672 18.7846097,18.5358984 L12.3923048,22.2264973 C11.1547005,22.9410285 9.62990915,22.9410285 8.39230485,22.2264973 L2,18.5358984 C0.762395693,17.8213672 2.30038211e-13,16.5008591 2.28261854e-13,15.0717968 L2.24265051e-13,7.69059892 C2.22932783e-13,6.26153656 0.762395693,4.94102849 2,4.22649731 L8.39230485,0.535898385 C9.62990915,-0.178632795 11.1547005,-0.178632795 12.3923048,0.535898385 Z M17.7657562,7.38119785 L10.4753975,7.38119785 C10.1293642,7.38119785 9.84884885,7.66171323 9.84884885,8.00774649 L9.84884885,10.3406195 C9.84884885,10.6866528 9.56833347,10.9671681 9.22230021,10.9671681 L3.02001444,10.9556768 C2.70543929,10.9550939 2.44458148,11.1864419 2.39927086,11.4884894 L2.39230511,11.5816439 L2.39230485,14.7546492 C2.39230485,15.1006825 2.67282023,15.3811978 3.01885349,15.3811978 L10.1153623,15.3811978 C10.4613956,15.3811978 10.7419109,15.1006825 10.7419109,14.7546492 L10.7419109,12.4185414 C10.7419109,12.0725081 11.0224263,11.7919927 11.3684596,11.7919927 L17.7657562,11.7919927 C18.1117895,11.7919927 18.3923048,11.5114774 18.3923048,11.1654441 L18.3923048,8.00774649 C18.3923048,7.66171323 18.1117895,7.38119785 17.7657562,7.38119785 Z\" 
id=\"形状结合\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"

},
{
"title": "Ollama",
"key": "ollama",
"options":{
},
"icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\">\n <title>编组 4备份 9</title>\n <g id=\"页面-1\" stroke=\"none\" stroke-width=\"1\" fill=\"none\" fill-rule=\"evenodd\">\n <g id=\"大模型\" transform=\"translate(-249.6077, -672.6188)\">\n <g id=\"编组-11备份-5\" transform=\"translate(232, 176)\">\n <g id=\"编组-4备份-9\" transform=\"translate(16, 496)\">\n <path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\" id=\"多边形\" fill=\"#4285F4\"></path>\n <g id=\"编组\" transform=\"translate(7, 6)\" fill=\"#FFFFFF\">\n <path d=\"M8.16182406,3.02233613 C8.22795573,2.25719328 8.23411396,1.52067227 7.77546421,0.800537815 C7.74672578,0.75547479 7.68026124,0.754109244 7.65030226,0.798407563 C7.62433781,0.836806723 7.60847065,0.860239496 7.59282541,0.883890756 C7.22222176,1.44294538 7.18294221,2.07710504 7.18532784,2.71465126 C7.18560523,2.78462185 7.32607955,2.89397479 7.41850854,2.91598739 C7.6499139,2.97110084 7.89108367,2.98655882 8.16182406,3.02233613 L8.16182406,3.02233613 Z M1.88397584,3.01894958 C2.15488266,2.9839916 2.38295925,2.94073109 2.6127557,2.92904202 C2.81148358,2.91893697 2.89081939,2.80155462 2.86812824,2.64768487 C2.79445135,2.14718487 2.70917922,1.64734034 2.59871937,1.15361345 C2.56715148,1.01257983 2.44110186,0.819327731 2.31754882,0.716638655 C1.81740033,1.46818067 1.8060825,2.22436555 1.88397584,3.01894958 L1.88397584,3.01894958 Z M8.98941307,13 
C9.00111926,12.9018445 9.00417064,12.8017773 9.02591864,12.7058067 C9.15779362,12.1241933 9.11008117,11.5607143 8.80061603,11.0447017 C8.61847654,10.7410042 8.62613273,10.5081513 8.8289661,10.198937 C9.39047501,9.34317647 9.30204054,8.44912605 8.83290515,7.58517227 C8.67767326,7.29928151 8.66053007,7.10586555 8.87584635,6.83018908 C9.76224372,5.69542017 8.99218705,3.88410504 7.55415613,3.67665126 C7.52674922,3.67266387 7.49756695,3.66632773 7.47182443,3.66905882 C7.04590763,3.71428571 6.830203,3.55484454 6.59447023,3.20712185 C5.79700663,2.03073109 3.93233769,2.16362605 3.27734568,3.4232605 C3.15856388,3.6517437 3.03173755,3.7097521 2.78052597,3.679 C1.927028,3.57439916 1.04745461,4.27301261 0.836299304,5.21354622 C0.691109223,5.86021429 0.777879298,6.45788655 1.26338117,6.96308403 C1.43292679,7.13951261 1.35858414,7.32342437 1.24740305,7.5117605 C0.854274691,8.17781933 0.721622997,8.88970588 0.947092041,9.63638655 C1.01904907,9.87470168 1.15491858,10.0996891 1.2912874,10.3123319 C1.41994457,10.512958 1.4399727,10.6993277 1.31825048,10.8983697 C0.951363969,11.4985 0.853109619,12.1332059 1.04984024,12.8101891 C1.06698343,12.8690714 1.05350189,12.9365294 1.05433408,13 L0.277453418,13 C0.279173285,12.9733445 0.287051387,12.9454328 0.281836305,12.9200882 C0.128435245,12.1706218 0.167159998,11.4434412 0.52478142,10.7481597 C0.551356142,10.696542 0.545530785,10.6045588 0.516071124,10.5527227 C-0.0763953922,9.51102941 -0.0331213138,8.45748319 0.438788059,7.39104622 C0.464475108,7.33309244 0.475016229,7.25143277 0.4547107,7.19369748 C0.308632946,6.77857143 0.0554795877,6.5 0,5.95378151 L0,5.46218487 C0.123664001,4.6730084 0.463420996,4.00236134 1.09228212,3.47935714 C1.1515898,3.42997899 1.15303227,3.28392017 1.14238019,3.18691176 C1.06415397,2.47792017 1.06526356,1.77471849 1.27980313,1.08577311 C1.43337063,0.592428571 1.68247398,0.174134454 2.21962734,0 L2.38606611,0 C2.71123197,0.0870672269 2.98940662,0.253172269 3.13803644,0.554084034 C3.28211693,0.845710084 
3.39163363,1.15547059 3.49560238,1.46402941 C3.5545217,1.63876471 3.57183133,1.82715546 3.61205403,2.03073109 C4.56497143,1.50537815 5.49081479,1.51389916 6.41449444,2.03526471 C6.5007652,1.69109244 6.56223659,1.3607395 6.66775876,1.04458824 C6.83186738,0.552609244 7.09839132,0.137319328 7.65768104,0 L7.82411981,0 C8.32726419,0.163428571 8.57936343,0.545945378 8.73986588,1.01121429 C8.97066097,1.68005882 8.99512746,2.37162605 8.9075252,3.05871429 C8.87107511,3.34444118 8.94103487,3.51316807 9.14730797,3.68446218 C10.059226,4.4417395 10.2602285,6.05417647 9.65084071,7.03747899 C9.55841172,7.18670588 9.55475007,7.29660504 9.62853792,7.45779412 C10.0154526,8.30355882 10.110822,9.1892521 9.75125876,10.0508571 C9.57322477,10.477563 9.52634452,10.8175294 9.70615386,11.254395 C9.93478524,11.8098992 9.86809878,12.4132521 9.76629373,13 L8.98941307,13 Z\" id=\"Fill-1\"></path>\n <path d=\"M4.59070286,8.06947143 C4.87170698,8.11994202 5.15964604,8.12005126 5.44081659,8.07034538 C5.62811567,8.03718992 5.81119831,7.99851765 5.97863571,7.92521513 C6.64145034,7.63517311 6.74669512,6.93371933 6.2379473,6.42644622 C5.61885058,5.80905546 4.45072786,5.80179076 3.82092359,6.41147983 C3.29408942,6.92137479 3.39672666,7.63468151 4.07041529,7.92843782 C4.23219377,7.99895462 4.40861886,8.03675294 4.59070286,8.06947143 M5.21024342,8.60820672 C5.03675875,8.62896303 4.86116585,8.62317311 4.68912365,8.59291261 C4.400075,8.54216891 4.10841881,8.49710588 3.8488853,8.38179916 C2.86273563,7.94367731 2.68675437,6.81027395 3.4550357,6.05993361 C4.20695056,5.32554286 5.55676892,5.25398824 6.40843607,5.90338739 C6.94852986,6.31518151 7.18082289,6.92470672 7.01161015,7.48605546 C6.83529602,8.07094622 6.24221923,8.51906387 5.5571018,8.57406807 C5.46661459,8.58133277 5.32802658,8.59411429 5.21024342,8.60820672\" id=\"Fill-3\"></path>\n <path d=\"M2.4434431,6.57873193 C2.16521296,6.57086639 1.96787207,6.37575714 1.9713118,6.11204286 C1.97558373,5.78682437 2.22108091,5.54151765 2.5263296,5.55735798 
C2.78020419,5.57057647 2.98009715,5.77508067 2.97782248,6.01934958 C2.97515946,6.30485798 2.70641634,6.5861605 2.4434431,6.57873193\" id=\"Fill-5\"></path>\n <path d=\"M7.57402892,6.57948025 C7.31333034,6.57281639 7.05152217,6.27086681 7.06600234,5.99344244 C7.07931744,5.73966933 7.30395429,5.54554328 7.5713659,5.55679538 C7.85619811,5.56881218 8.08388633,5.8328542 8.07251302,6.13791723 C8.06280409,6.39737101 7.84926316,6.58647185 7.57402892,6.57948025\" id=\"Fill-7\"></path>\n <path d=\"M5.37064046,6.72264958 C5.30389851,6.64000672 5.18173246,6.62629664 5.09779184,6.69200672 L4.96541755,6.79573361 L4.83304325,6.69200672 C4.74910263,6.62629664 4.62693658,6.64000672 4.56019464,6.72264958 C4.49339722,6.80529244 4.50732259,6.92556975 4.59126321,6.99127983 L4.76491432,7.12728824 C4.7828897,7.14138067 4.80286236,7.15110336 4.82344528,7.15798571 L4.83115694,7.35402353 C4.83537339,7.45949832 4.92563868,7.5417042 5.03276977,7.53760756 C5.13990085,7.53351092 5.22339763,7.44464118 5.21923666,7.33911176 L5.21058184,7.12078824 C5.21024897,7.11199412 5.20847362,7.10363697 5.20692019,7.09511597 L5.33957189,6.99127983 C5.4235125,6.92556975 5.43743788,6.80529244 5.37064046,6.72264958\" id=\"Fill-9\"></path>\n </g>\n </g>\n </g>\n </g>\n </g>\n</svg>"

}
]

Configuration reference

```json
{
  "title": "Platform display name",
  "key": "Unique platform identifier",
  "options": {
    // platform configuration options
  },
  "icon": "SVG icon markup"
}
```

options fields

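| Field | Type | Description |
|---|---|---|
| llmEndpoint | string | Base URL of the API service |
| chatPath | string | Path of the chat endpoint |
| embedPath | string | Path of the embedding endpoint |
| rerankPath | string | Path of the rerank endpoint |
| multimodalEmbedPath | string | Path of the multimodal embedding endpoint |
| modelList | array | List of available models |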
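The table does not say how `llmEndpoint` and the `*Path` fields are combined into a request URL. The sketch below assumes the backend simply joins them, which is consistent with the default entries (for example `https://api.deepseek.com` + `/chat/completions`). The names `endpoint_url` and `PATH_FIELDS` are hypothetical and are not part of aiflowy.

```python
from typing import Optional

# Hypothetical mapping from an operation name to the options field holding its path.
PATH_FIELDS = {
    "chat": "chatPath",
    "embed": "embedPath",
    "rerank": "rerankPath",
    "multimodalEmbed": "multimodalEmbedPath",
}


def endpoint_url(options: dict, kind: str) -> Optional[str]:
    """Return llmEndpoint joined with the path for `kind` (a PATH_FIELDS key),
    or None when the platform does not configure that path."""
    base = options.get("llmEndpoint")
    path = options.get(PATH_FIELDS[kind])
    if not base or not path:
        return None
    # Exactly one slash between the base URL and the path.
    return base.rstrip("/") + "/" + path.lstrip("/")


if __name__ == "__main__":
    deepseek = {"llmEndpoint": "https://api.deepseek.com", "chatPath": "/chat/completions"}
    gitee = {"llmEndpoint": "https://ai.gitee.com", "chatPath": "/v1/chat/completions",
             "embedPath": "/v1/embeddings", "rerankPath": "/v1/rerank"}
    print(endpoint_url(deepseek, "chat"))   # https://api.deepseek.com/chat/completions
    print(endpoint_url(gitee, "embed"))     # https://ai.gitee.com/v1/embeddings
    print(endpoint_url(deepseek, "embed"))  # None
```

A missing path yields `None`. That matches how the default DeepSeek entry declares only `chatPath` and no embedding or rerank path.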

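modelList fields

| Field | Type | Description |
|---|---|---|
| llmModel | string | Model identifier |
| supportChat | boolean | Whether the model supports chat |
| supportFunctionCalling | boolean | Whether the model supports function calling |
| supportEmbed | boolean | Whether the model supports embedding (vectorization) |
| multimodal | boolean | Whether the model is multimodal |
| title | string | Display name of the model |
| description | string | Description of the model |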
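A small checker that mirrors the table can catch mistakes such as `"supportChat":"true"`, where a string is written instead of a boolean, before the backend loads the file. This is a sketch only. Two behaviors are assumptions, not documented aiflowy behavior: `llmModel` and `title` are treated as required, and an omitted capability flag is read as false. `check_model` and `models_supporting` are hypothetical names.

```python
# Expected type of each modelList field, taken from the table above.
MODEL_FIELD_TYPES = {
    "llmModel": str,
    "supportChat": bool,
    "supportFunctionCalling": bool,
    "supportEmbed": bool,
    "multimodal": bool,
    "title": str,
    "description": str,
}
REQUIRED_FIELDS = ("llmModel", "title")  # assumption: not stated in the table


def check_model(model: dict) -> list:
    """Return a list of problems found in one modelList entry."""
    problems = []
    for name in REQUIRED_FIELDS:
        if name not in model:
            problems.append(f"missing {name}")
    for name, value in model.items():
        expected = MODEL_FIELD_TYPES.get(name)
        if expected is None:
            problems.append(f"unknown field {name}")
        elif not isinstance(value, expected):
            problems.append(f"{name} should be {expected.__name__}")
    return problems


def models_supporting(model_list: list, flag: str) -> list:
    """Return the llmModel of every entry whose `flag` is exactly true.
    Assumption: an omitted flag counts as false."""
    return [m["llmModel"] for m in model_list if m.get(flag) is True]


if __name__ == "__main__":
    sample = {"llmModel": "Qwen3-32B", "supportChat": "true", "title": "Qwen3-32B"}
    print(check_model(sample))  # ['supportChat should be bool']
```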

Adding a new platform
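New entries are normally added by editing `ai-brands.json` by hand. As an alternative, the minimal Python sketch below appends an entry and refuses a `key` that is already in use. `add_brand` is a hypothetical helper, and the file path is passed in rather than assumed. Note that writing the file back with `json.dumps` re-indents the whole file, so review the diff before committing.

```python
import json
from pathlib import Path


def add_brand(path: Path, brand: dict) -> None:
    """Append a platform entry to the JSON array stored at `path`.

    Raises ValueError if another entry already uses the same key.
    """
    brands = json.loads(path.read_text(encoding="utf-8"))
    if any(existing.get("key") == brand["key"] for existing in brands):
        raise ValueError(f"duplicate platform key: {brand['key']}")
    brands.append(brand)
    # ensure_ascii=False keeps Chinese titles and descriptions readable;
    # indent=2 rewrites the indentation of the entire file.
    path.write_text(json.dumps(brands, ensure_ascii=False, indent=2) + "\n", encoding="utf-8")
```

For example, `add_brand(Path("ai-brands.json"), {"title": "Anthropic", "key": "anthropic", "options": {...}, "icon": "<svg ...>"})` appends the entry from the example that follows.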

Example: adding the Anthropic Claude platform (for reference only)

`/v1/messages` is Anthropic's native Messages API. Its request format differs from the OpenAI-style `.../chat/completions` endpoints used by the default platforms, so confirm that the backend can call it before relying on this entry.

```json
{
  "title": "Anthropic",
  "key": "anthropic",
  "options": {
    "llmEndpoint": "https://api.anthropic.com",
    "chatPath": "/v1/messages",
    "modelList": [
      {
        "llmModel": "claude-3-5-sonnet-20241022",
        "supportChat": true,
        "supportFunctionCalling": true,
        "multimodal": true,
        "title": "Claude 3.5 Sonnet",
        "description": "Claude 3.5 Sonnet is Anthropic's most advanced model, with strong performance in reasoning, knowledge, coding, math, and vision. It excels at complex tasks while maintaining high speed and strong vision capabilities."
      },
      {
        "llmModel": "claude-3-opus-20240229",
        "supportChat": true,
        "supportFunctionCalling": true,
        "multimodal": true,
        "title": "Claude 3 Opus",
        "description": "Claude 3 Opus is the most capable model in the Claude 3 family, showing near-human comprehension and fluency on highly complex tasks. It delivers top-tier performance in reasoning, math, coding, creative writing, and more."
      },
      {
        "llmModel": "claude-3-haiku-20240307",
        "supportChat": true,
        "supportFunctionCalling": true,
        "multimodal": true,
        "title": "Claude 3 Haiku",
        "description": "Claude 3 Haiku is the fastest and most compact model, designed for near-instant responses. It performs well on simple queries and tasks while maintaining high accuracy."
      }
    ]
  },
  "icon": "<svg width=\"20.7846097px\" height=\"22.7623957px\" viewBox=\"0 0 20.7846097 22.7623957\" version=\"1.1\" xmlns=\"http://www.w3.org/2000/svg\" xmlns:xlink=\"http://www.w3.org/1999/xlink\"><title>Anthropic</title><g fill=\"#FF8C00\" transform=\"translate(-1.6077, -0.6188)\"><path d=\"M14,1.15470054 L20.3923048,4.84529946 C21.6299092,5.55983064 22.3923048,6.88033872 22.3923048,8.30940108 L22.3923048,15.6905989 C22.3923048,17.1196613 21.6299092,18.4401694 20.3923048,19.1547005 L14,22.8452995 C12.7623957,23.5598306 11.2376043,23.5598306 10,22.8452995 L3.60769515,19.1547005 C2.37009085,18.4401694 1.60769515,17.1196613 1.60769515,15.6905989 L1.60769515,8.30940108 C1.60769515,6.88033872 2.37009085,5.55983064 3.60769515,4.84529946 L10,1.15470054 C11.2376043,0.440169359 12.7623957,0.440169359 14,1.15470054 Z\"/><text x=\"12\" y=\"14\" fill=\"white\" font-family=\"Arial\" font-size=\"8\" text-anchor=\"middle\">A</text></g></svg>"
}
```

Notes

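- Unique `key`: each platform's `key` must be unique, because the system uses it to identify the platform internally.
- API paths: make sure `chatPath`, `embedPath`, and the other paths match the API specification of the corresponding platform.
- Model identifiers: `llmModel` should be the platform's official model name.
- Icon format: use SVG markup, embedded as a JSON string with its double quotes escaped (`\"`).
- Capability flags: set `supportChat`, `supportFunctionCalling`, and the other flags according to what the model can actually do.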
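The notes above can be partly automated as a pre-deployment check. The sketch below is hypothetical; `lint_brands` is not an aiflowy function. It flags:

- duplicate keys;
- icons that are not SVG strings;
- paths that do not start with `/`;
- models that enable neither `supportChat` nor `supportEmbed`.

The last rule is a heuristic that fits the default file, so relax it if you add models without either flag (for example, rerank-only models). The script cannot verify that a path actually matches the provider's API specification.

```python
import json
import sys
from collections import Counter

PATH_FIELDS = ("chatPath", "embedPath", "rerankPath", "multimodalEmbedPath")


def lint_brands(brands: list) -> list:
    """Return human-readable problems found in a parsed ai-brands.json array."""
    problems = []
    # Note 1: every platform key must be unique.
    for key, count in Counter(b.get("key") for b in brands).items():
        if count > 1:
            problems.append(f"duplicate key: {key}")
    for brand in brands:
        key = brand.get("key", "?")
        # Note 4: the icon should be inline SVG markup.
        if not str(brand.get("icon", "")).lstrip().startswith("<svg"):
            problems.append(f"{key}: icon is not an SVG string")
        options = brand.get("options", {})
        # Note 2 (format only): API paths are expected to start with "/".
        for field in PATH_FIELDS:
            path = options.get(field)
            if path is not None and not str(path).startswith("/"):
                problems.append(f"{key}: {field} should start with '/'")
        # Note 5 (heuristic): each model should enable at least one main capability.
        for model in options.get("modelList", []):
            if not (model.get("supportChat") is True or model.get("supportEmbed") is True):
                problems.append(f"{key}/{model.get('llmModel')}: no supportChat or supportEmbed flag")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python lint_brands.py path/to/ai-brands.json
    with open(sys.argv[1], encoding="utf-8") as f:
        for problem in lint_brands(json.load(f)):
            print(problem)
```

An entry with empty `options`, like the default Ollama entry, produces no problems.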